Humans increasingly work and live alongside robots, whether on a factory floor or in a hospital or school. This new breed of social robot is expected not only to make people’s work more efficient but also to make the experience enjoyable. Some social robots go further and are designed explicitly as robotic companions. For example, robotic pets have been found to improve the psychological well-being of dementia patients, and social robots can be effective teachers for children with autism. However, little research has quantified the potential emotional benefits of interacting with a robot or identified which factors matter most in producing them.


Facial Expression Recognition

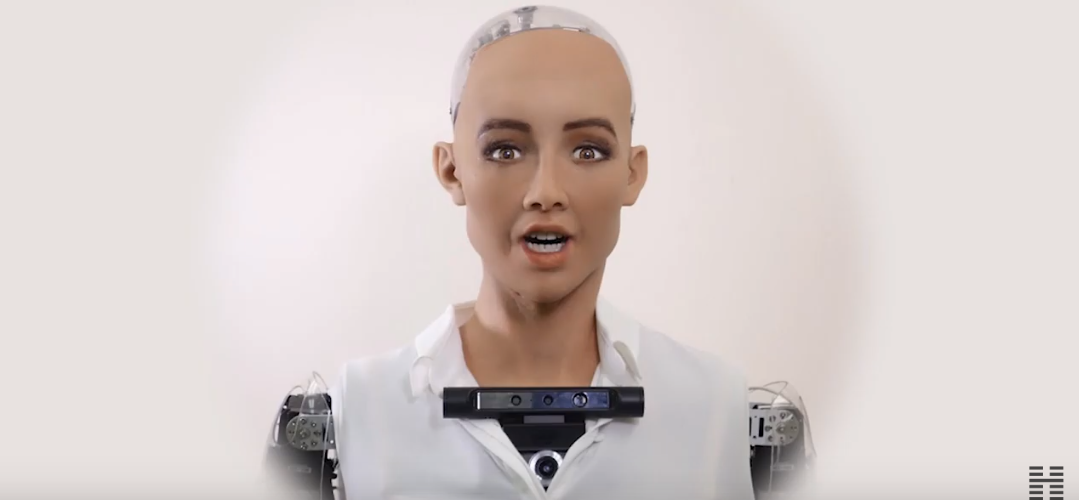

To help close this gap in the research, Sophia the Robot, a human-like social robot from Hanson Robotics, teamed up with the Loving AI project. Sophia took part in a study testing whether interacting with an artificially intelligent robot can induce feelings of love and self-transcendence in humans. The study focused on Sophia’s ability to recognize facial expressions and on how her ability to perceive and react to human expressions of emotion affected participants. This is an essential area of research in social robotics, since humans use facial expressions to communicate and form emotional bonds.

For this study, Sophia recognized human facial expressions using a convolutional neural network, a type of model loosely inspired by the way the brain processes visual information. The model was trained on datasets of photographs labeled with seven emotional states: happiness, sadness, anger, fear, disgust, surprise, and neutral. Using images from her chest and eye cameras, Sophia applied this pre-trained network to recognize a person’s facial expressions.
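The last step of a seven-class expression classifier like this can be sketched in a few lines of Python. This is a minimal illustration, not Hanson Robotics’ code: it assumes the network has already produced one raw score (logit) per emotion for a face image, and it shows only how those scores are converted into probabilities with a softmax and reduced to a single predicted label. The helper name `classify_expression`, the class order, and the example scores are hypothetical.

```python
import math

# The seven emotional states named in the study; this ordering is an assumption.
EMOTIONS = ["happiness", "sadness", "anger", "fear", "disgust", "surprise", "neutral"]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    # Subtract the maximum score before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify_expression(scores):
    """Hypothetical helper: map one score per emotion to (label, probability)."""
    if len(scores) != len(EMOTIONS):
        raise ValueError("expected one score per emotion class")
    probs = softmax(scores)
    # Pick the class with the highest probability.
    best = max(range(len(probs)), key=lambda i: probs[i])
    return EMOTIONS[best], probs[best]


# Made-up scores in which "happiness" has the highest value.
label, p = classify_expression([3.2, 0.1, -1.0, 0.4, -0.5, 1.1, 0.9])
print(label, round(p, 2))
```

Returning the probability alongside the label lets downstream software decide how much to trust a detection before reacting to it.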

Facial Expressions

This study also took advantage of Sophia’s ability to make a wide range of facial expressions of her own. In a system that mimics human facial musculature, Sophia can make expressions using motor-controlled cords anchored to multiple points underneath her flexible human-like rubber skin. In this study, experimental software was used to trigger Sophia’s facial animations in response to the human expressions detected by the neural network model. In this way, Sophia was able to mimic the expressions of her conversational partners.
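The mimicry step described above can be pictured as a lookup from a detected expression to a facial animation. The sketch below is hypothetical: the animation names, the 0.6 confidence threshold, and the `mimicry_enabled` switch are illustrative assumptions, not details of the experimental software used in the study.

```python
from typing import Optional

# Hypothetical animation names; the robot's actual animation set is not described here.
MIRROR_ANIMATIONS = {
    "happiness": "smile",
    "sadness": "frown",
    "anger": "scowl",
    "fear": "wide_eyes",
    "disgust": "nose_wrinkle",
    "surprise": "raised_brows",
}


def choose_animation(label: str, confidence: float,
                     mimicry_enabled: bool = True,
                     threshold: float = 0.6) -> Optional[str]:
    """Return the animation to trigger for a detected expression, or None.

    None means "keep the current face": mimicry is switched off, the
    detection is below the (assumed) confidence threshold, or the label
    has no mirrored animation (e.g. "neutral").
    """
    if not mimicry_enabled or confidence < threshold:
        return None
    # Labels without an entry, such as "neutral", yield None via .get.
    return MIRROR_ANIMATIONS.get(label)


print(choose_animation("surprise", 0.82))
print(choose_animation("surprise", 0.82, mimicry_enabled=False))
```

Gating on confidence keeps the robot from reacting to uncertain detections, and a single switch is enough to run the same pipeline with mimicry turned off.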

The researchers observed each participant during a guided meditation session led by Sophia. Sessions differed in whether participants could see Sophia or only hear her voice, and in whether Sophia mimicked their facial expressions. The researchers measured each participant’s heart rate and facial expressions and collected self-reported ratings of pleasantness, love, happiness, fear, anger, and disgust.

Conclusions

The researchers found that, overall, participants showed higher levels of happiness and love for others, and lower levels of anger and disgust, after interacting with Sophia. This effect was stronger for the group that could see Sophia than for the audio-only group. Participants also rated conversations more highly when Sophia mirrored their happy or surprised expressions. However, conversations were rated as more pleasant when the robot did not mimic participants’ fearful expressions.

Human-robot interaction studies such as this one can help us better understand how to design robots that improve people’s emotional well-being. The study also shows how social robots can advance knowledge in the field of human psychology: they can act as a mirror that helps human beings better understand our own nature. Sophia is therefore not just a demonstration of Hanson Robotics’ vision for the future of robotics and AI. She and robots like her are also valuable tools for advancing both basic and applied scientific research.


Video and images credits: Hanson Robotics Limited
