Originally posted on February 1, 2021 on Medium by Alishba Imran.

I’ve been working with Hanson Robotics, in collaboration with the International Iberian Nanotechnology Laboratory (INL) and SingularityNET, to develop the new Sophia 2020 platform.

Sophia is a humanoid that acts as a platform for advanced robotics and AI research, particularly for understanding human-robot interactions.

The Sophia 2020 platform is a framework for human-like embodied cognition that we’ve developed to advance Sophia’s expression, manipulation, perception, and design. Our work with Sophia is intended to serve as a standard for understanding human-robot interaction and will be used in autism treatment and in medical studies of depression.

In this article, I’ll highlight some of the key areas we’ve developed. If you’d like to go deeper, here are two resources where you can learn more:

- The preprint paper we published, which outlines the entire platform in more detail. Link.

- The AAAS poster we presented at the AAAS Annual Meeting. Link.

An Integrative Platform for Embodied Cognition

Sophia 2020 combines expressive, human-like robotic faces, arms, and locomotion with machine learning and neuro-symbolic AI dialog ensembles, NLP, and NLG tools, all within an open creative toolset.

Sensors and Touch Perception

In human emulation robotics, touch perception & stimulation are recognized as critical for the development of new human-inspired cognitive machine learning neural architectures.

Our framework uses a bioinspired reverse micelle self-assembling porous polysiloxane emulsion artificial skin for animated facial mechanisms, increasing naturalism while reducing power requirements by 23x vs. prior materials.

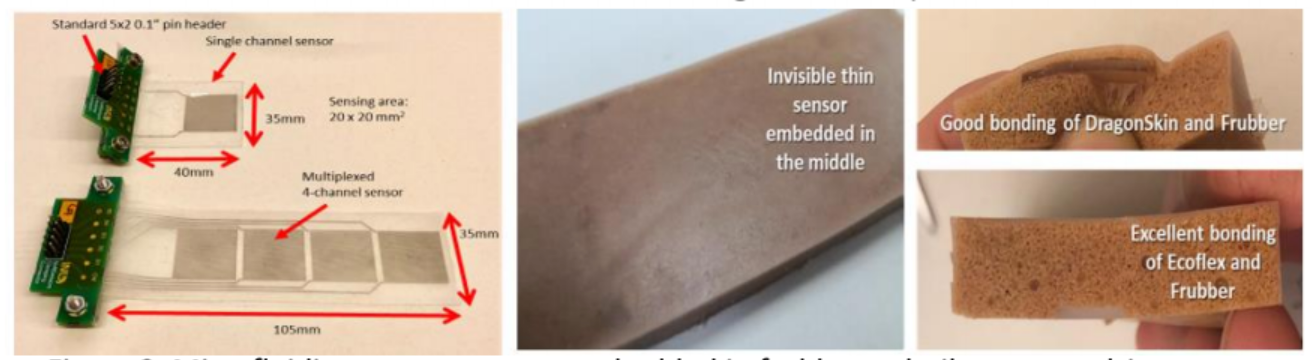

We use a highly flexible Frubber skin for ultra-realistic facial expressions, but traditional rigid tactile sensors often hinder the Frubber’s flexibility. To address this, we devised a new soft polymeric strain and pressure sensor that is compatible with the Frubber skin: flexible rectangular microfluidic channels in a polysiloxane substrate, filled with liquid metal to form a resistive sensor.

As the sensor is pressed or stretched, the microfluidic channels deform, changing the resistance proportionally. The sensor’s microfluidic channels were produced by spin-coating the polymer over microfabricated silicon mold masters, and the channels were then filled with EGaIn liquid metal injected through reservoir-aligned vias.
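To make the stretch-to-resistance relationship concrete, here is a minimal sketch that treats one channel as a rectangular liquid-metal conductor with R = ρL/A. It assumes an approximate literature resistivity for EGaIn and hypothetical channel dimensions (not the dimensions of our sensor), and it assumes the liquid metal's volume is conserved under uniaxial stretch: a stretch ratio λ lengthens the channel by λ and shrinks its cross-section by 1/λ, so R = R₀λ². For small strains ε this gives ΔR/R₀ ≈ 2ε, which is approximately proportional.

```python
# Approximate literature value for EGaIn resistivity in ohm-metres (assumption).
EGAIN_RESISTIVITY_OHM_M = 2.94e-7


def channel_resistance(length_m: float, width_m: float, height_m: float,
                       resistivity: float = EGAIN_RESISTIVITY_OHM_M) -> float:
    """Resistance R = rho * L / A of a rectangular channel filled with liquid metal."""
    return resistivity * length_m / (width_m * height_m)


def stretched_resistance(r0: float, stretch_ratio: float) -> float:
    """Resistance after uniaxial stretch, assuming constant liquid-metal volume.

    Length scales by the stretch ratio and cross-sectional area by its inverse,
    so R = R0 * stretch_ratio**2.
    """
    if stretch_ratio <= 0:
        raise ValueError("stretch ratio must be positive")
    return r0 * stretch_ratio ** 2


if __name__ == "__main__":
    # Hypothetical channel: 50 mm long, 100 um wide, 50 um tall.
    r0 = channel_resistance(0.05, 100e-6, 50e-6)
    r_stretched = stretched_resistance(r0, 1.10)  # 10 % strain
    print(f"R0 = {r0:.3f} ohm, stretched = {r_stretched:.3f} ohm, "
          f"dR/R0 = {(r_stretched - r0) / r0:.3f}")
```

With these made-up dimensions the unstretched channel is about 2.94 Ω, and a 10% stretch raises its resistance by about 21%.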

A pressure sensitivity of 0.23%/kPa over the 0-40 kPa range was measured using a flat 1 cm³ probe and incremental weights, in agreement with simulations; this range is comparable to typical human interaction forces. These Frubber-compatible sensors pave the way for robots with touch-sensitive, flexible skins.
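Treating the response as linear over the measured range, as a single sensitivity figure suggests, converting a resistance reading into pressure is a simple inversion of ΔR/R₀ = S·P with S = 0.23%/kPa. The sketch below assumes a baseline resistance R₀ recorded with no load; the function name and the clipping and range-check policy are illustrative choices, not part of our published sensor pipeline.

```python
SENSITIVITY_PER_KPA = 0.0023  # 0.23 %/kPa, as measured
MAX_CALIBRATED_KPA = 40.0     # upper end of the measured range


def pressure_from_resistance(r_measured: float, r_baseline: float) -> float:
    """Estimate contact pressure (kPa) from a resistance reading.

    Inverts the linear model dR/R0 = S * P. Small negative values (noise around
    the unloaded baseline) are clipped to zero; readings beyond the calibrated
    range raise, because the linear model was only measured up to 40 kPa.
    """
    if r_baseline <= 0:
        raise ValueError("baseline resistance must be positive")
    relative_change = (r_measured - r_baseline) / r_baseline
    pressure_kpa = max(0.0, relative_change / SENSITIVITY_PER_KPA)
    if pressure_kpa > MAX_CALIBRATED_KPA:
        raise ValueError(f"{pressure_kpa:.1f} kPa is outside the calibrated 0-40 kPa range")
    return pressure_kpa


if __name__ == "__main__":
    # A 2.3 % rise over a 2.0-ohm baseline corresponds to about 10 kPa.
    print(pressure_from_resistance(2.046, 2.0))
```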

Hands and Arms

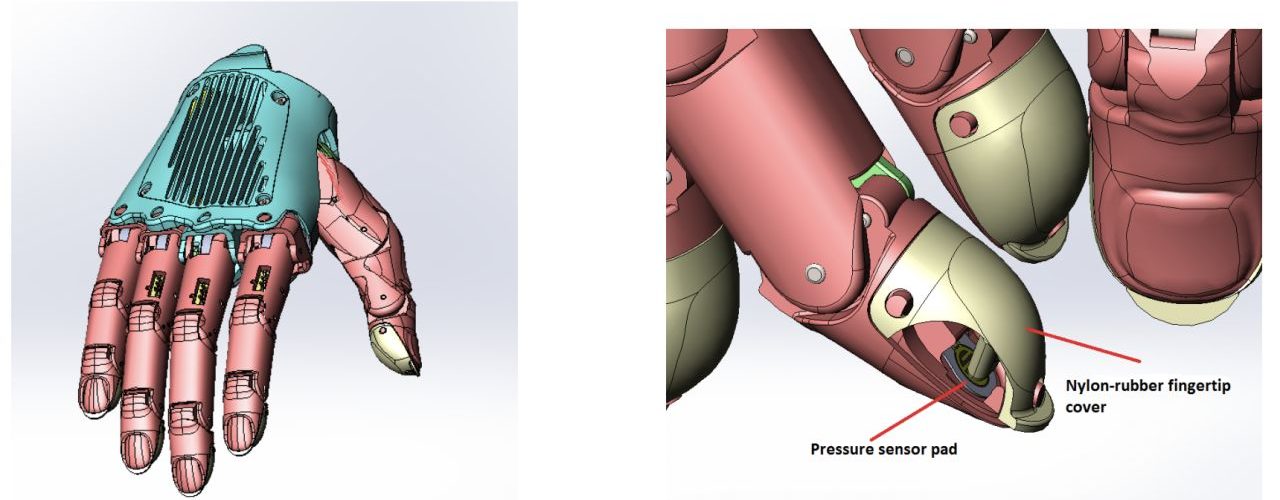

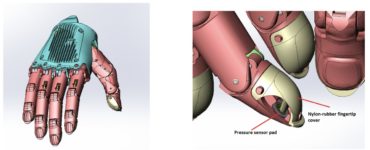

For the platform, we developed novel 14-DOF robotic arms with humanlike proportions, position and force feedback in every joint, and series elastic actuators in all hand DOFs, all with relatively low-cost manufacturing.

Some of the functions we’ve developed on the arms and hands are:

- PID control (reading the sensor and computing the desired actuator output).

- Servo motors with 360 degrees of position control.

- URDF models in several motion control frameworks (Roodle, Gazebo, MoveIt).

- Force feedback controls, IK solvers, and PID loops, combining classic motion control with computer animation, wrapped in ROS API.
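The PID control in the list above can be sketched in a few lines. This is a generic discrete PID controller driving a toy single-joint model, not the controller running on Sophia's arms: the plant (joint velocity equals the command minus a constant load, standing in for something like gravity), the gains, and the time step are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook discrete PID controller (no anti-windup or output limits)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        # Read the sensor (measurement) and compute the actuator command.
        error = setpoint - measurement
        self.integral += error * dt
        # Skip the derivative on the first call to avoid a spurious kick.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_joint(setpoint: float = 1.0, load: float = 0.5,
                   dt: float = 0.01, steps: int = 2000) -> float:
    """Drive a toy joint (position' = command - load) and return its final position."""
    pid = PID(kp=4.0, ki=2.0, kd=0.1)  # hypothetical gains
    position = 0.0
    for _ in range(steps):
        command = pid.update(setpoint, position, dt)
        position += (command - load) * dt  # explicit Euler step of the toy plant
    return position


if __name__ == "__main__":
    print(f"final joint position: {simulate_joint():.4f}")
```

The integral term is what removes the steady-state error caused by the constant load; with the proportional term alone, this toy joint would settle 0.125 short of the setpoint.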

Grasping Control

We tested visual servoing for grasp detection using an iteration on the Generative Grasping Convolutional Neural Network (GG-CNN). This algorithm takes depth images of objects as input and predicts the quality and pose of a grasp at every pixel, for different grasping tasks and objects.

It achieved an 83% grasp success rate on previously unseen objects, 88% on household objects that were moved during the grasp attempt, and 81% when grasping in dynamic clutter. You can learn more about how this works in this article I wrote.
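GG-CNN outputs several maps with one value per depth-image pixel: a grasp-quality score, the grasp angle (encoded as cos 2θ and sin 2θ, so that θ and θ + π describe the same antipodal grasp), and the gripper width. The minimal sketch below shows only the post-processing step that turns those maps into a single grasp by taking the highest-quality pixel. The network itself is omitted, the synthetic maps are made up for the example, and GG-CNN pipelines typically also smooth the maps (for example with a Gaussian filter) before this step.

```python
import numpy as np


def best_grasp(quality: np.ndarray, cos2: np.ndarray, sin2: np.ndarray,
               width: np.ndarray) -> tuple:
    """Pick the highest-quality pixel and decode the grasp predicted there.

    All inputs are (H, W) maps. Returns (row, col, angle_rad, width, quality).
    """
    row, col = np.unravel_index(np.argmax(quality), quality.shape)
    # Angles are encoded as (cos 2θ, sin 2θ); halving atan2 recovers θ in (-π/2, π/2].
    angle = 0.5 * np.arctan2(sin2[row, col], cos2[row, col])
    return int(row), int(col), float(angle), float(width[row, col]), float(quality[row, col])


if __name__ == "__main__":
    # Synthetic 8x10 maps: best grasp at pixel (3, 5), angle 30 degrees, width 40 px.
    q = np.zeros((8, 10))
    q[3, 5], q[6, 2] = 0.9, 0.4
    theta = np.pi / 6
    cos2 = np.full(q.shape, np.cos(2 * theta))
    sin2 = np.full(q.shape, np.sin(2 * theta))
    w = np.full(q.shape, 40.0)
    print(best_grasp(q, cos2, sin2, w))
```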

We also used classic IK solvers, which achieved over 98% success in pick-and-place, figure drawing, handshaking, and games of baccarat.
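To illustrate what a classic analytic IK solver computes, here is the textbook closed-form solution for a planar two-link arm (shoulder and elbow). Sophia's 14-DOF arms need a more general solver than this, so treat it as a toy model; the link lengths and target point are hypothetical.

```python
import math


def two_link_ik(x: float, y: float, l1: float, l2: float,
                flip_elbow: bool = False) -> tuple:
    """Closed-form inverse kinematics for a planar 2-link arm.

    Returns (shoulder, elbow) joint angles in radians that place the
    end effector at (x, y). Raises ValueError if the target is unreachable.
    """
    cos_elbow = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 - 1e-9 <= cos_elbow <= 1.0 + 1e-9:
        raise ValueError("target is outside the arm's workspace")
    elbow = math.acos(max(-1.0, min(1.0, cos_elbow)))  # law of cosines
    if flip_elbow:
        elbow = -elbow  # the mirror-image solution
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow), l1 + l2 * math.cos(elbow))
    return shoulder, elbow


def two_link_fk(shoulder: float, elbow: float, l1: float, l2: float) -> tuple:
    """Forward kinematics, used here to check the IK solution."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y


if __name__ == "__main__":
    L1, L2 = 0.30, 0.25  # hypothetical upper-arm and forearm lengths (m)
    target = (0.35, 0.20)
    for flip in (False, True):
        q = two_link_ik(*target, L1, L2, flip_elbow=flip)
        print(q, two_link_fk(*q, L1, L2))
```

Both elbow configurations reach the same point; a real controller would pick between them using joint limits or the previous pose.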

Hanson AI SDK

The Hanson-AI SDK includes various perception and control commands. Here are a few of the key features:

Perception:

– Face tracking, recognition, expressions, saliency, gestures, STT, SLAM, etc.

– Procedural animation responses to perception: tracking, imitation, saccades

– Gazebo, Blender, and Unity simulations

Robotic controls:

– ROS, IK solver, PID loops, perceptual fusion, logging & debugging tools

– Movie-quality animation, with authoring tools for interactive performances

– Arms & hands: social gestures, rock paper scissors, figure drawing, baccarat

– Dancing, wheeled mobility, and walking (KAIST/UNLV DRC Hubo).

To put this all together, this is an overview of all the key components of Sophia:

Consciousness Experiments

Consciousness is difficult to describe, especially in robots. Although there is still much debate about whether and how consciousness can be measured, we conducted studies that attempt to measure it.

On the integrated Sophia platform, we investigated simple signals of consciousness in Sophia’s software using the Phi coefficient of integrated information theory (IIT). IIT was proposed in 2004 by Giulio Tononi, a psychiatrist and neuroscientist at the University of Wisconsin, and is an evolving framework and calculus for studying and quantifying consciousness. You can read this paper to gain a deeper understanding.

The data used to calculate Phi comprises time-series of Short Term Importance (STI) values corresponding to Atoms (nodes and links) in OpenCog’s Attentional Focus. To make these computations feasible, we preprocessed our data using Independent Component Analysis and fed the reduced set of time series into software that applies known methods for approximating Phi.
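Exact Phi is intractable for all but tiny systems, which is why approximations are needed. As a hedged illustration of what such an approximation can look like, the sketch below computes a simple "whole-minus-sum" measure under a linear-Gaussian assumption: how much more information the whole system's past carries about its present than its two halves do separately, minimized over bipartitions. It is not necessarily the method implemented in the software we used. It assumes the ICA step has already produced a (T, n) array of component time series (for example with scikit-learn's FastICA), it omits the partition normalization used by more careful estimators, and the coupled toy series is hypothetical test data, not STI values.

```python
import itertools

import numpy as np


def gaussian_past_present_mi(x: np.ndarray, lag: int = 1) -> float:
    """I(X_{t-lag}; X_t) in nats, assuming x (shape T x n) is jointly Gaussian."""
    present, past = x[lag:], x[:-lag]
    n = x.shape[1]
    joint = np.cov(np.hstack([present, past]), rowvar=False)
    _, logdet_present = np.linalg.slogdet(joint[:n, :n])
    _, logdet_past = np.linalg.slogdet(joint[n:, n:])
    _, logdet_joint = np.linalg.slogdet(joint)
    return 0.5 * (logdet_present + logdet_past - logdet_joint)


def whole_minus_sum_phi(x: np.ndarray, lag: int = 1) -> float:
    """Minimum over bipartitions {A, B} of I_whole - (I_A + I_B), unnormalized."""
    n = x.shape[1]
    if n < 2:
        raise ValueError("need at least two components to partition")
    whole = gaussian_past_present_mi(x, lag)
    best = np.inf
    # Keep component 0 in part A so each bipartition is visited once.
    for size in range(n - 1):
        for extra in itertools.combinations(range(1, n), size):
            part_a = [0, *extra]
            part_b = [i for i in range(n) if i not in part_a]
            parts = (gaussian_past_present_mi(x[:, part_a], lag)
                     + gaussian_past_present_mi(x[:, part_b], lag))
            best = min(best, whole - parts)
    return float(best)


def coupled_ar(steps: int, coupling: float, seed: int = 0) -> np.ndarray:
    """Toy 2-component series where each component is driven by the other's past."""
    rng = np.random.default_rng(seed)
    x = np.zeros((steps, 2))
    for t in range(1, steps):
        x[t, 0] = coupling * x[t - 1, 1] + rng.standard_normal()
        x[t, 1] = coupling * x[t - 1, 0] + rng.standard_normal()
    return x


if __name__ == "__main__":
    print("coupled:", whole_minus_sum_phi(coupled_ar(5000, 0.9)))
    print("independent:", whole_minus_sum_phi(coupled_ar(5000, 0.0)))
```

In the coupled toy series each component's past predicts only the other component's present, so the halves carry almost no information on their own and the measure is clearly positive; for independent noise it stays near zero.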

During a meditation-guiding dialogue, we tracked spreading attention activations as Hanson-AI and OpenCog pursued dialogue goals while Sophia guided human participants through meditation sessions.

A comparison of system log files with the Phi time series indicated that Phi was higher soon after the start of more intense verbal interaction and lower while Sophia was watching her subject meditate or breathe deeply, etc.

Looking Ahead

As we move forward, our goal is to test our control system (such as manipulation commands) in precise environments and applications. We will be participating in more studies with humans to understand how robotic interactions impact the mood, behaviour, and actions of humans.

Alongside this, we’re working on developing an organization to provide governance guidance for Sophia’s evolution. It will be a collaboration of interdisciplinary experts and smart-robot enthusiasts who will approve and select key aspects of Sophia’s development. More on this soon!

If you have questions, feedback, or thoughts about anything, feel free to get in touch here:

Email: alishbai734@gmail.com

Linkedin: Alishba Imran

Twitter: @alishbaimran_
